I’m back! And I’m ready to hit you with some really heavy thoughts that have been weighing me down, because I need to get them off my chest.

Years ago, Rob Spekkens and Matt Leifer published an article in which they tried to define a “causally neutral theory of quantum inference”. Their starting point was an analogy between a density matrix (actually the Choi-Jamiołkowski matrix of a CPT map, but hey, let’s not split hairs) and a conditional probability distribution. They argued that in many respects, this “conditional density matrix” could be used to define equations of inference for density matrices, in complete analogy with the rules of Bayesian inference applied to probability distributions.

At the time, something about this idea just struck me as wrong, although I couldn’t quite put my finger on what it was. The paper is not technically wrong, but the idea just felt like the wrong approach to me. Matt Leifer even wrote a superb blog post explaining the analogy between quantum states and probabilities, and I was kind of half convinced by it. At least, my rational brain could find no flaw in the idea, but some deep, subterranean sense of aesthetics could not accept this analogy.

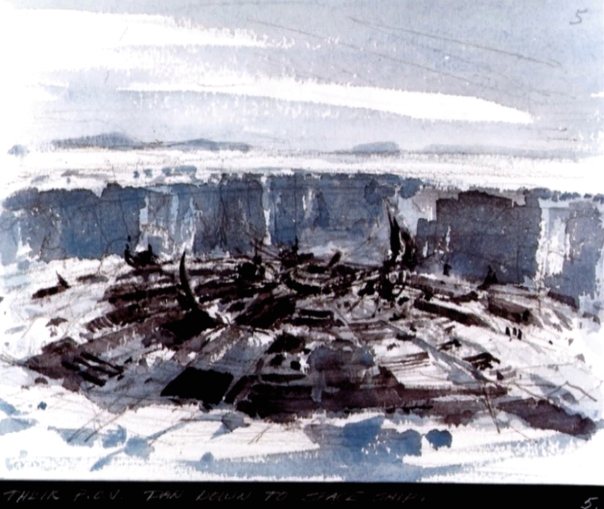

In my paper with Časlav Brukner on quantum causal models, we took a diametrically opposite approach. We refused to deal directly with quantum states, and instead tried to identify the quantum features of a system by looking only at the level of statistics, where the normal rules of inference would apply. The problem is, the price that you pay in working at the level of probabilities is that the structure of quantum states slips through your fingers and gets buried in the sand.

(I like to think of probability distributions as sand. You can push them around any which way, but the total amount of sand stays the same. Underneath the sand, there is some kind of ontological structure, like a dinosaur skeleton or an alien space-ship, whose ridges and contours sometimes show through in places where we brush the sand away. In quantum mechanics, it seems that we can never completely reveal what is buried, because when we clear away sand from one part, we end up dumping it on another part and obscuring it.)

One problem I had with this probability-level approach was that the quantum structure did not emerge the way I had hoped. In particular, I could not find anything like a quantum Reichenbach Principle to replace the old classical Reichenbach Principle, and so the theory was just too unconstrained to be interesting. Other approaches along the same lines tended to deal with this by putting in the quantum stuff by hand, without actually ‘revealing’ it in a natural way. So I gave up on this for a while.

And what became of Leifer and Spekkens’ approach, the one that I thought was wrong? Well, it also didn’t work out. Their analogy seemed to break down when they tried to add the state of the system at more than two times. To put the last nail in, last year Dominic Horsman et al. presented some work that showed that any approach along the lines of Leifer and Spekkens would run into trouble, because quantum states evolving in time just do not behave like probabilities do. Probabilities are causally neutral, which means that when we use information about one system to infer something about another system, it really doesn’t matter if the systems are connected in time or separated in space. With quantum systems, on the other hand, the difference between time and space is built into the formalism and (apparently) cannot easily be got rid of. Even in relativistic quantum theory, space and time are not quite on an equal footing (and Carlo Rovelli has had a lot to say about this in the past).

Still, after reading Horsman et al.’s paper, I felt that it was too mathematical and didn’t touch the root of the problem with the Leifer-Spekkens analogy. Again, it was a case of technical accuracy but without conveying the deeper, intuitive reasons why the approach would fail. What finally reeled me back in was a recent paper by John-Mark Allen et al. in which they achieve an elegant definition of a quantum causal model, complete with a quantum Reichenbach Principle (even for multiple systems), but at the expense of essentially giving up on the idea of defining a quantum conditional state over time, and hence forfeiting the analogy with classical Bayesian inference. To me, it seemed like a masterful evasion, or like one of those dramatic Queen sacrifices you see in chess tournaments. They realized that what was standing in the way of quantum causal models was the desire to represent all of the structure of conditional probability distributions, but that this was not necessary for defining a causal model. So they were able to achieve a quantum causal model with a version of Reichenbach’s Principle, but at the price of retaining only a partial analog of classical Bayesian inference.

This left me pondering that old paper of Leifer and Spekkens. Why did they really fail? Is there really no way to salvage a causally neutral theory of quantum inference? I will do my best to answer only the first question here, leaving the second one open for the time being.

One strategy to use when you suspect something is wrong with an idea is to imagine a world in which the idea is true, and then enter that world and look around to see what is wrong with it. So let us imagine that, indeed, all of the usual rules of Bayesian inference for probabilities have an exact counterpart in terms of density matrices (and similar objects). What would that mean?

Well, if it looks like a duck and quacks like a duck … we could apply Ockham’s razor and say that a density matrix must actually represent a duck probability distribution. This is great news! It means that we can argue that density matrices are actually epistemic objects — they represent our ignorance about some underlying reality. This could be the chance we’ve been waiting for to sweep away all the sand and reveal the quantum skeleton underneath!

The problem is that this is too good to be true. Why? Because it seems to imply that a density matrix uniquely corresponds to a probability distribution over the elements of reality (whatever they are). In jargon, this means that the resulting model would be preparation non-contextual. But this is impossible because — as Spekkens himself proved in a classic paper — any ontological model of quantum mechanics must be preparation contextual.

Let me try to simplify that. It turns out that there are many different ways to prepare a quantum system, such that it ends up being described by the same statistics (as determined by its density matrix). For example, if a machine prepares one of two possible pure states based on the outcome of a coin flip (whose outcome is unknown to us), this can result in the same density matrix for the system as a machine that deterministically entangles the quantum system with another system (which we don’t have access to). These details about the preparation that don’t affect the density matrix are called the preparation context.
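Here is a quick numerical check of that claim, as a minimal sketch rather than anything from the papers above. I am assuming a single qubit, a fair coin that picks between the basis states |0> and |1>, and a Bell state shared with the inaccessible second system; those particular choices, and the helper name `partial_trace_second`, are mine and purely illustrative.

```python
import numpy as np

# Preparation 1 (assumed example): a hidden fair coin picks |0> or |1>.
ket0 = np.array([1.0, 0.0])
ket1 = np.array([0.0, 1.0])
rho_mixture = 0.5 * np.outer(ket0, ket0) + 0.5 * np.outer(ket1, ket1)

# Preparation 2 (assumed example): deterministically entangle the qubit with
# a second qubit in the Bell state (|00> + |11>)/sqrt(2), then lose access
# to the second qubit.
bell = (np.kron(ket0, ket0) + np.kron(ket1, ket1)) / np.sqrt(2)
rho_joint = np.outer(bell, bell.conj())


def partial_trace_second(rho, dim_a=2, dim_b=2):
    """Trace out the second subsystem of a bipartite density matrix."""
    # Reshape so the indices read (a, b, a', b'), then sum over b = b'.
    reshaped = rho.reshape(dim_a, dim_b, dim_a, dim_b)
    return np.einsum("ajbj->ab", reshaped)


rho_entangled = partial_trace_second(rho_joint)

# Both preparations leave us with the same density matrix for the system.
same_state = np.allclose(rho_mixture, rho_entangled)
print(rho_mixture)
print(rho_entangled)
print(same_state)
```

In this toy case both procedures hand us the maximally mixed state, so no measurement on the system alone can tell them apart. Whatever distinguishes the coin flip from the entangling machine lives entirely in the preparation context.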

The thing is, if a density matrix is really interpretable as a probability distribution, then which distribution it represents has to depend on the preparation context (that is what Spekkens proved). Since Leifer and Spekkens are only looking at density matrices (sans context), we should not expect them to behave just like probability distributions — the analogy has to break somewhere.

Now this is nowhere near a proof; that’s why it is appearing here on a dodgy blog instead of in a scientific publication. But I think it does capture the reason why I felt that the approach of Leifer and Spekkens was just ‘wrong’: they seemed to be placing density matrices into the role of probabilities, where they just don’t fit.

Now let me point out some holes in my own argument. Although a density matrix can’t be thought of as a single probability distribution, it can perhaps be thought of as representing an equivalence class of distributions, and maybe these equivalence classes could turn out to obey all the usual laws of classical inference, thereby rescuing the analogy. However, there is absolutely no reason to expect this to be true; on the contrary, one would expect it to be false. To me, this would be almost as if you tried to model a single atom as if it were a whole gas of particles, and found that it works. Or, to get even more Zen, it would be like an avalanche consisting of one grain of sand.

It is interesting that the analogy can be carried as far as it has been. Perhaps this can be accounted for by the fact that, even though they can’t serve as replacements for probability distributions, density matrices do have a tight relationship with probabilities through the Born rule (the rule that tells us how to predict probabilities for measurements on a quantum system). So maybe we should expect at least some of the properties of probabilities to somehow rub off on density matrices.

Although it seems that a causally neutral theory of Bayesian inference cannot succeed using just density matrices (or similar objects), perhaps there are other approaches that would be more fruitful. What if one takes an explicitly preparation-contextual ontological model (like the fascinating Beltrametti-Bugajski model) and uses it to supplement our density matrices with the context that they need in order to identify them with probability distributions? What sort of theory of inference would that give us? Or, what if we step outside of the ontological models framework and look for some other way to define quantum inference? The door remains tantalizingly open.

Dear Jacques,

I’ve been reading with great interest your recent publication in Phys. Rev. Lett. Like you, I’m also intrigued by the subjects of quantum measurement and foundations of Q. M. Though now a retired research physicist, I recently published an article demonstrating that the atomic wavefunction is immediately reduced to a single spin-direction eigenfunction as it enters the Stern-Gerlach magnetic field (in the Canadian J. Phys., and online at https://tspace.library.utoronto.ca/bitstream/1807/69186/1/cjp-2015-0031.pdf).

I believe I’ve read with care the handful of articles Wheeler wrote concerning delayed choice. But, I’ve never found an instance of him referring, there, to wave-particle duality. That duality seems to be an aspect attached later, extraneously, to delayed choice by other physicists, not him, and is not a part of delayed choice as Wheeler conceived it. He writes, instead, of the photon traveling both paths simultaneously, or just one path, depending on how it will later be measured. He doesn’t associate wave-like (particle-like) behavior with one travel mode or the other.

I say this because you tell us that “…Wheeler associated wave and particle notions to the possibility (respectively, impossibility) of a photon being in a path superposition inside the interferometer.” Have I missed something in Wheeler’s publications?

I believe this is an important understanding because we need to address these two things, delayed choice and wave particle, separately in order to analyze and appreciate their reality. Of course I’d value your thoughts and comments on these ideas.

Cordially,

Michael Devereux

Los Alamos, USA

P.S. I hope you’ll share this message with your coauthors.

Jaynes made a very similar-sounding complaint when discussing how to apply the maximum-entropy principle to determine Bayesian prior probability distributions to represent complete ignorance: “Our parameter spaces seem to have a mollusk-like quality which prevents us from answering this, unless we can find some new principle which gives them a property of ‘rigidity’.” https://bayes.wustl.edu/etj/articles/groups.pdf

I suspect that this mollusk-like squishiness has the same origin as your ‘sand with buried structure’ sensation.

I think you are on the right track with looking directly at empirical predictions rather than benchmarking against the existing concept of a quantum state. Spekkens & Leifer are doing interesting things, but like you, I have my doubts about whether mucking with ‘conditional states’ is going to work out.

If you want a really off-the-wall idea, try Aharonov’s two-time interpretation of the quantum state: both the past and the future causally impact the present. In this interpretation, the density matrix is a matrix instead of a vector (probability distribution) because there are two copies of configuration space involved, one for the future, one for the past. https://arxiv.org/abs/quant-ph/0105101v2