(Note: this blog post is vaguely related to a paper I wrote. You can find it on the arXiv here.)

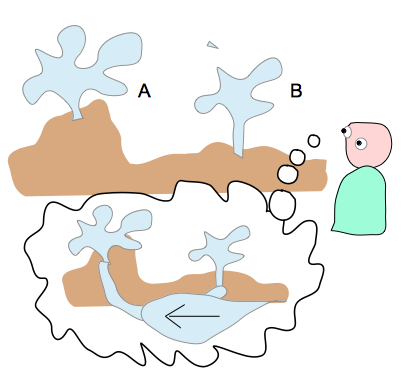

Suppose you are walking along the beach, and you come across two holes in the rock, spaced apart by some distance; let us label them ‘A’ and ‘B’. You observe an interesting correlation between them. Every so often, at an unpredictable time, water will come spraying out of hole A, followed shortly after by a spray of water out of hole B. Given our day-to-day experience of such things, most of us would conclude that the holes are connected by a tunnel underneath the rock, which is in turn connected to the ocean, such that a surge of water in the underground tunnel causes the water to spray from the two holes at about the same time.

Now, therein lies a mystery: how did our brains make this deduction so quickly and easily? The mere fact of a statistical correlation does not tell us much about the direction of cause and effect. Two questions arise. First, why do correlations require explanations in the first place? Why can we not simply accept that the two geysers spray water in synchronisation with each other, without searching for explanations in terms of underground tunnels and ocean surges? Secondly, how do we know in this instance that the explanation is that of a common cause, and not that (for example) the spouting of water from one geyser triggers some kind of chain reaction that results in the spouting of water from the other?

The first question is a deep one. We have in our minds a model of how the world works, which is the product partly of history, partly of personal experience, and partly of science. Historically, we humans have evolved to see the world in a particular way that emphasises objects and their spatial and temporal relations to one another. In our personal experience, we have seen that objects move and interact in ways that follow certain patterns: objects fall when dropped and signals propagate through chains of interactions, like a series of dominoes falling over. Science has deduced the precise mechanical rules that govern these motions.

According to our world-view, causes always occur before their effects in time, and one way that correlations can arise between two events is if one is the cause of the other. In the present example, we may reason as follows: since hole B always spouts after A, the causal chain of events, if it exists, must run from A to B. Next, suppose that I were to cover hole A with a large stone, thereby preventing it from emitting water. If the occasion of its emission were the cause of hole B’s emission, then hole B should also cease to produce water when hole A is covered. If we perform the experiment and we find that hole B’s rate of spouting is unaffected by the presence of a stone blocking hole A, we can conclude that the two events of spouting water are not connected by a direct causal chain.

The only other way in which correlations can arise is by the influence of a third event — such as the surging of water in an underground tunnel — whose occurrence triggers both of the water spouts, each independently of the other. We could promote this aspect of our world-view to a general principle, called the Principle of the Common Cause (PCC): whenever two events A and B are correlated, then either one is a cause of the other, or else they share a common cause (which must occur some time before both of these events).

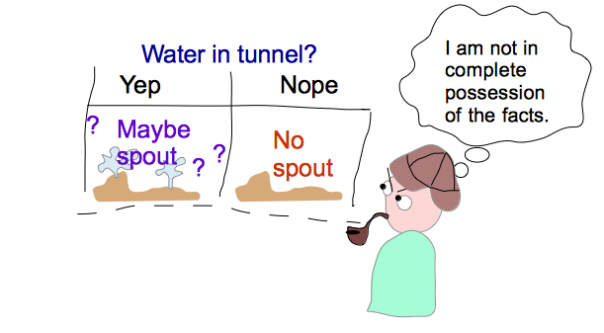

The Principle of the Common Cause tells us where to look for an explanation, but it does not tell us whether our explanation is complete. In our example, we used the PCC to deduce that there must be some event preceding the two water spouts which explains their correlation, and for this we proposed a surge of water in an underground tunnel. Now suppose that the presence of water in this tunnel is absolutely necessary in order for the holes to spout water, but that on some occasions the holes do not spout even though there is water in the tunnel. In that case, simply knowing that there is water in the tunnel does not completely eliminate the correlation between the two water spouts. That is, even though I know there is water in the tunnel, I am not certain whether hole B will emit water, unless I happen to know in addition that hole A has just spouted. So, the probability of B still depends on A, despite my knowledge of the ‘common cause’. I therefore conclude that I do not know everything that there is to know about this common cause, and there is still information to be had.

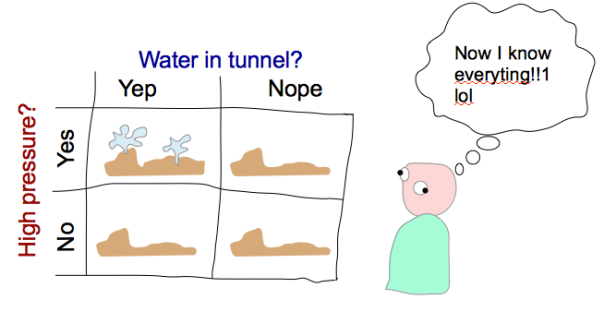

It could be, for instance, that the holes will only spout water if the water pressure is above a certain threshold in the underground tunnel. If I am able to detect both the presence of the water and its pressure in the tunnel, then I can predict with certainty whether the two holes will spout or not. In particular, I will know with certainty whether hole B is going to spout, independently of A. Thus, if I had stakes riding on the outcome of B, and you were to try and sell me the information “whether A has just spouted”, I would not buy it, because it does not provide any further information beyond what I can deduce from the water in the tunnel and its pressure level. It is a fact of general experience that, conditional on complete knowledge of the common causes of two events, those events are no longer correlated: their joint probability factorises into a product of the individual probabilities. This is called the principle of Factorisation of Probabilities (FP). The conjunction of FP and PCC is called Reichenbach’s Common Cause Principle (RCCP).
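For readers who prefer symbols, FP can be written compactly. The notation here is my own shorthand and not taken from the paper: A and B are the two events, and C stands for complete knowledge of their common causes.

```latex
% Factorisation of Probabilities (FP): conditional on the common cause C,
% the joint probability of A and B is the product of the individual ones.
P(A, B \mid C) = P(A \mid C)\, P(B \mid C)

% Equivalently, whenever P(A \mid C) > 0, learning A tells me nothing
% new about B once C is known:
P(B \mid A, C) = P(B \mid C)
```

In the tunnel example, C would be "water is present and its pressure is above the threshold (or not)", and the second line is just the statement that I would not pay for the information "whether A has just spouted".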

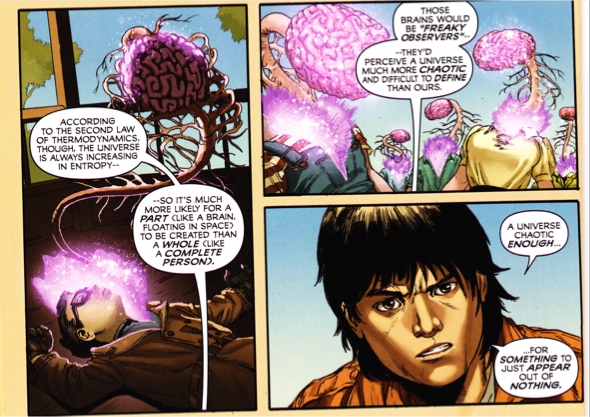

In the above example, the complete knowledge of the common cause allowed me to perfectly determine whether the holes would spout or not. The conditional independence of these two events is therefore guaranteed. One might wonder why I did not talk about the principle of predetermination: conditional on complete knowledge of the common causes, the events are determined with certainty. The reason is that predetermination might be too strong; it may be that there exist phenomena that are irreducibly random, such that even a full knowledge of the common causes does not suffice to determine the resulting events with certainty.

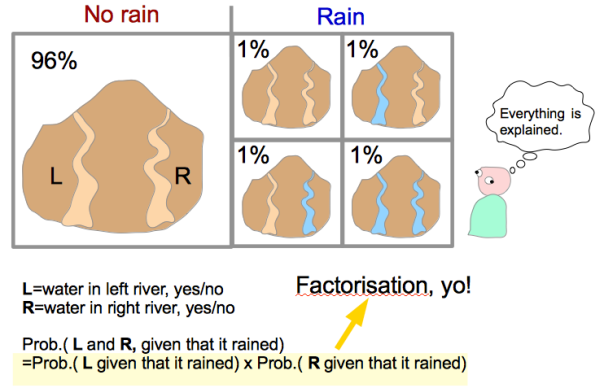

As another example, consider two river beds on a mountain slope, one on the left and one on the right. Usually (96% of the time) it does not rain on the mountain and both rivers are dry. If it does rain on the mountain, then there are four possibilities with equal likelihood: (i) the river beds both remain dry, (ii) the left river flows but the right one is dry, (iii) the right river flows but the left is dry, or (iv) both rivers flow. Thus, without knowing anything else, the fact that one river is running makes it more likely that the other one is. However, conditional on it having rained on the mountain, if I know that the left river is flowing (or dry), this does not tell me anything about whether the right river is flowing or dry. So, it seems that after conditioning on the common cause (rain on the mountain) the probabilities factorise: knowing about one river tells me nothing about the other.
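The numbers in this example are simple enough to check by brute force. The following is only a sketch: it builds the joint distribution from the figures quoted above (4% chance of rain, four equally likely outcomes given rain), and the helper `prob` and the variable names are my own, introduced for illustration.

```python
from fractions import Fraction
from itertools import product

# Outcomes are triples (rain, left_flows, right_flows).
P_RAIN = Fraction(4, 100)

# No rain: both rivers are dry.
joint = {(False, False, False): 1 - P_RAIN}
# Rain: the four combinations of left/right flowing are equally likely.
for l, r in product([False, True], repeat=2):
    joint[(True, l, r)] = P_RAIN * Fraction(1, 4)

def prob(event, given=lambda o: True):
    """Return P(event | given) by summing over the joint distribution."""
    norm = sum(p for o, p in joint.items() if given(o))
    return sum(p for o, p in joint.items() if given(o) and event(o)) / norm

rain = lambda o: o[0]
left = lambda o: o[1]
right = lambda o: o[2]

# Without conditioning on rain, the rivers are correlated.
p_right = prob(right)                          # 1/50
p_right_given_left = prob(right, given=left)   # 1/2

# Conditional on rain, knowing about the left river changes nothing.
p_right_rain = prob(right, given=rain)                                   # 1/2
p_right_rain_left = prob(right, given=lambda o: rain(o) and left(o))     # 1/2

print(p_right, p_right_given_left, p_right_rain, p_right_rain_left)
```

Seeing the left river flow raises the probability that the right one flows from 1/50 to 1/2, but once I condition on rain both probabilities are 1/2 and the correlation is gone.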

Now we have a situation in which the common cause does not completely determine the outcomes of the events, but where the probabilities nevertheless factorise. Should we then conclude that the correlations are explained? If we answer ‘yes’, we have fallen into a trap.

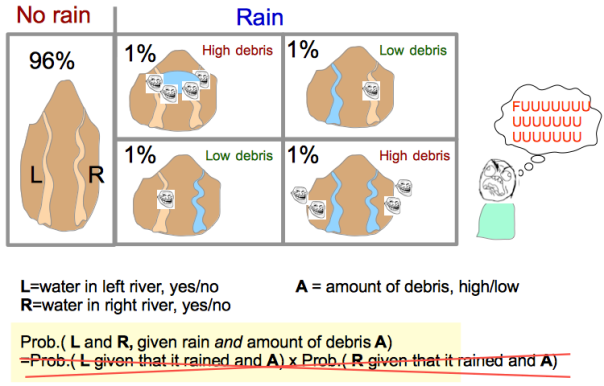

The trap is that there may be additional information which, if discovered, would make the rivers become correlated. Suppose I find a meeting point of the two rivers further upstream, in which sediment and debris tend to gather. If there is only a little debris, it will be pushed to one side (the side chosen effectively at random), diverting water to one of the rivers and blocking the other. Alternatively, if there is a large build-up of debris, it will either dam the rivers, leaving them both dry, or else be completely destroyed by the build-up of water, feeding both rivers at once. Now, if I know that it rained on the mountain and I know how much debris is present upstream, knowing whether one river is flowing will provide information about the other (e.g. if there is a little debris upstream and the right river is flowing, I know the left must be dry).

Before I knew anything, the rivers seemed to be correlated. Conditional on whether it rained on the mountain-top, the correlation disappeared. But now, conditional on it having rained on the mountain and on the amount of debris upstream, the correlation is restored! If the only tools I had to explain correlations were the PCC and the FP, then how could I ever be sure that the explanation is complete? Unless the information of the common cause is enough to predetermine the outcomes of the events with certainty, there is always the possibility that the correlations have not been explained, because new information about the common causes might come to light which renders the events correlated again.
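The reappearance of the correlation can also be checked numerically. This sketch needs assumptions that the story does not pin down: I take a little debris and a large build-up to be equally likely when it rains, and each of the two outcomes in either case to be equally likely. These choices are hypothetical, picked so that averaging over the debris recovers the four equally likely outcomes from before. The helper `prob` is again my own.

```python
from fractions import Fraction

HALF = Fraction(1, 2)
P_RAIN = Fraction(4, 100)

# Outcomes are (rain, debris, left_flows, right_flows).
# ASSUMPTION: given rain, "little" and "large" debris are equally likely,
# and each resulting outcome within a debris level is equally likely.
joint = {
    (False, None, False, False): 1 - P_RAIN,          # no rain: both dry
    (True, "little", True, False): P_RAIN * HALF * HALF,  # pushed aside: left only
    (True, "little", False, True): P_RAIN * HALF * HALF,  # pushed aside: right only
    (True, "large", False, False): P_RAIN * HALF * HALF,  # dam holds: both dry
    (True, "large", True, True): P_RAIN * HALF * HALF,    # dam breaks: both flow
}

def prob(event, given=lambda o: True):
    """Return P(event | given) by summing over the joint distribution."""
    norm = sum(p for o, p in joint.items() if given(o))
    return sum(p for o, p in joint.items() if given(o) and event(o)) / norm

left = lambda o: o[2]
right = lambda o: o[3]
rain = lambda o: o[0]
rain_little = lambda o: o[0] and o[1] == "little"
rain_large = lambda o: o[0] and o[1] == "large"

# Conditional on rain alone, the probabilities still factorise.
right_rain = prob(right, given=rain)                                   # 1/2
right_rain_left = prob(right, given=lambda o: rain(o) and left(o))     # 1/2

# Conditional on rain AND the amount of debris, the correlation returns.
right_little = prob(right, given=rain_little)                                   # 1/2
right_little_left = prob(right, given=lambda o: rain_little(o) and left(o))     # 0
right_large_left = prob(right, given=lambda o: rain_large(o) and left(o))       # 1

print(right_rain, right_rain_left, right_little, right_little_left, right_large_left)
```

With only a little debris, a flowing left river guarantees a dry right one; with a large build-up, it guarantees a flowing right one. Conditioning on more of the common cause has undone the factorisation that conditioning on rain alone produced.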

Now, at last, we come to the main point. In our classical world-view, observations tend to be compatible with predetermination. No matter how unpredictable or chaotic a phenomenon seems, we find it natural to imagine that every observed fact could be predicted with certainty, in principle, if only we knew enough about its relevant causes. In that case, we are right to say that a correlation has not been fully explained unless Reichenbach’s principle is satisfied. But this last property is now just seen as a trivial consequence of predetermination, implicit in our world-view. In fact, Reichenbach’s principle is not sufficient to guarantee that we have found an explanation. We can only be sure that the explanation has been found when the observed facts are fully determined by their causes.

This poses an interesting problem to anyone (like me) who thinks the world is intrinsically random. If we give up predetermination, we have lost our sufficient condition for correlations to be explained. Normally, if we saw a correlation, after eliminating the possibility of a direct cause we would stop searching for an explanation only when we found one that could perfectly determine the observations. But if the world is random, then how do we know when we have found a good enough explanation?

In this case, it is tempting to argue that Reichenbach’s principle should be taken as a sufficient (not just necessary) condition for an explanation. Then, we know to stop looking for explanations as soon as we have found one that causes the probabilities to factorise. But as I just argued with the example of the two rivers, this doesn’t work. If we believed this, then we would have to accept that it is possible for an explained correlation to suddenly become unexplained upon the discovery of additional facts! Short of a physical law forbidding such additional facts, this makes for a very tenuous notion of explanation indeed.

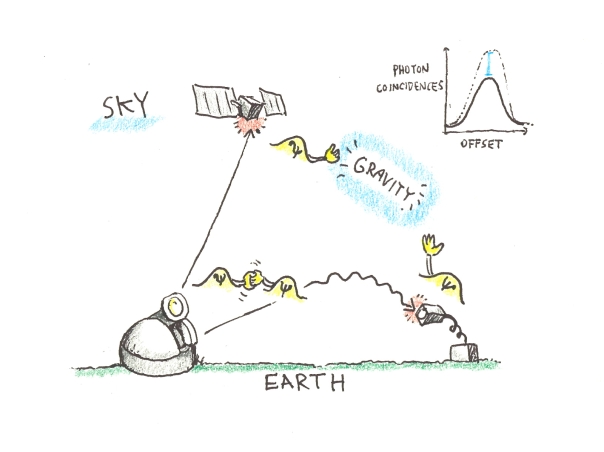

The question of what should constitute a satisfactory explanation for a correlation is, I think, one of the deepest problems posed to us by quantum mechanics. The way I read Bell’s theorem is that (assuming that we accept the theorem’s basic assumptions) quantum mechanics is either non-local, or else it contains correlations that do not satisfy the factorisation part of Reichenbach’s principle. If we believe that factorisation is a necessary part of explanation, then we are forced to accept non-locality. But why should factorisation be a necessary requirement of explanation? It is only justified if we believe in predetermination.

A critic might try to argue that, without factorisation, we have lost all ability to explain correlations. But I’m saying that this is true even for those who would accept factorisation but reject predetermination. I say, without predetermination, there is no need to hold on to factorisation, because it doesn’t help you to explain correlations any better than the rest of us non-determinists! So what are we to do? Maybe it is time to shrug off factorisation and face up to the task of finding a proper explanation for quantum correlations.